Less than a week after it imposed search limits on the AI version of its Bing search engine, Microsoft is raising those limits.

In the wake of some embarrassing reports of erratic behavior by the new Bing, Microsoft decided last Friday to limit users to five chat “turns” per session and 50 turns per day.

A turn consists of a question by a user and an answer by Bing. At the completion of five turns, users are prompted to change the subject of their conversation with the AI.

The changes were necessary because the underlying AI model used by the new Bing can get confused by long chat sessions made up of many turns, the company explained in its Bing Blog.

However, on Tuesday, after a hue and cry from Bing users, Microsoft raised the usage limits to six turns a session and 60 turns per day.

The new limits will enable the vast majority of users to use the new Bing naturally, the company blogged.

“That said, our intention is to go further, and we plan to increase the daily cap to 100 total chats soon,” it added.

“In addition,” it continued, “with this coming change, your normal searches will no longer count against your chat totals.”

Crowd Input Needed

Microsoft decided to impose limits on the usage of the AI-powered Bing after some users found ways to goad the search engine into calling them an enemy and even into doubling down on errors of fact it had made, such as the name of Twitter’s CEO.

“[W]e have found that in long, extended chat sessions of 15 or more questions, Bing can become repetitive or be prompted/provoked to give responses that are not necessarily helpful or in line with our designed tone,” Microsoft acknowledged in a blog post.

With the new limits on Bing AI usage, the company may be admitting something else. “It indicates they didn’t adequately predict some of the responses and turns this took,” Greg Sterling, co-founder of Near Media, a news, commentary, and analysis website, told TechNewsWorld.

“Despite the horror stories written about the new Bing, there’s a lot of productivity being gained with it, which points to the usefulness of such a tool in certain content scenarios,” maintained Jason Wong, a vice president and analyst with Gartner.

“For a lot of software companies, you’re not going to know what you’re going to get until you release your software to the public,” Wong told TechNewsWorld.

“You can have all sorts of testing,” he said. “You can have teams doing stress tests on it. But you’re not going to know what you have until the crowd gets to it. Then, hopefully, you can discern some wisdom from the crowd.”

Wong cited a lesson learned by Reid Hoffman, co-founder of LinkedIn: “If you are not embarrassed by the first version of your product, you’ve launched too late.”

Google Too Cautious With Bard?

Microsoft’s decision to launch its AI search vehicle with potential warts contrasts with the more cautious approach taken by Google with its Bard AI search product.

“Bing and Google are in different positions,” Sterling explained. “Bing needs to take more chances. Google has more to lose and will be more cautious as a result.”

But is Google being too cautious? “It depends on what kind of rabbit they have in their hat,” observed Will Duffield, a policy analyst at the Cato Institute.

“You’re only being too cautious if you have a really good rabbit and you don’t let it out,” Duffield told TechNewsWorld. “If your rabbit’s not ready, there’s nothing over-cautious about keeping it back.”

“If they have something good and they release it, then maybe people will say they should have launched it months ago. But maybe months ago, it wasn’t as good,” he added.

Threat to Workers

Microsoft also blogged that it was going to begin testing a Bing AI option that lets a user choose the tone of a chat from “precise” — which will use Microsoft’s proprietary AI technology to focus on shorter, more search-focused answers — to “balanced” and “creative” — which will use ChatGPT to give a user longer and more chatty answers.

The company explained that the goal is to give users more control over the type of chat behavior to best meet their needs.

“Choice is good in the abstract,” Sterling observed. “However, in these early days, the quality of ChatGPT answers may not be high enough.”

“So until the guardrails are reinforced, and ChatGPT accuracy improves, it may not be such a great thing,” he said. “Bing will have to manage expectations and disclaim ChatGPT content to some degree.”

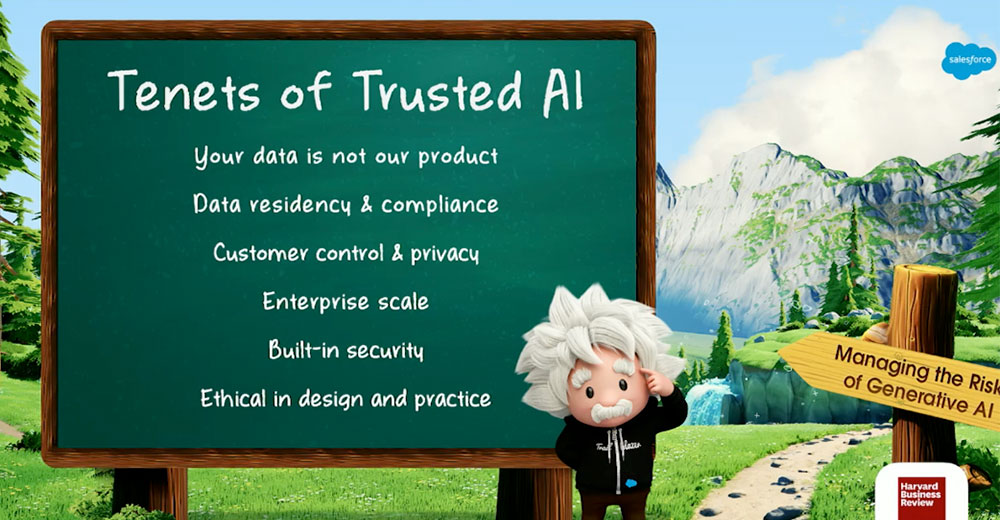

In a related matter, a survey of 1,000 business leaders released Tuesday by Resume Builder found that 49% of their companies are using ChatGPT and 30% plan to. Of the companies using the AI technology, 48% say it has replaced workers. The following charts reveal more data on how companies are using ChatGPT.

Copilot for Humans

Sterling was skeptical of the survey’s replaced-workers finding. “I think lots of companies are testing it. So in that sense, companies are ‘using’ it,” he noted.

“And some companies may recognize ways it can save time or money and potentially replace manual work or outsourcing,” he continued. “But the survey results lack context and are only presenting partial information.”

He acknowledged, however, that hiring and freelancing patterns will change over time due to AI.

Wong found the number of businesses using ChatGPT unsurprising, but not the share reporting that it had replaced people.

“I can see not having someone write documentation for an update to an application or portal, but to downsize or shift people out of a role because they’re using ChatGPT I would find hard to believe,” he said.

“Gartner’s advice to clients exploring ChatGPT and Bing chat is to think of them as copilots,” he continued. “It’s going to help create something that should be reviewed by a human, by someone who is going to assess the validity of an answer.”

“In only a small amount of use cases could they replace a human,” he concluded.